- Sphere Engine overview

- Compilers

- Overview

- API

- Widgets

- Resources

- Problems

- Overview

- API

- Widgets

- Handbook

- Resources

- Containers

- Overview

- Glossary

- API

- Workspaces

- Handbook

- Resources

- RESOURCES

- Programming languages

- Modules comparison

- Webhooks

- Infrastructure management

- API changelog

- FAQ

As we discussed in the previous chapter, the system verifies the correctness of the submissions using components called judges. There are two types of judge programs:

- test case judge - responsible for assessing the submission in the context of a single test case,

- master judge - responsible for summarizing the results from individual test cases

Content managers can choose a judge among multiple built-in judge programs that are supported on Sphere Engine. In many cases the supported judges can be used instead of writing a new one. If the programming problem requires a non-standard method of evaluation, then the content manager can implement their own judge. This is possible in each programming language offered by the Sphere Engine system.

In this section you can find out more about implementing your own test case and master judges.

To view the source code please go directly to the Judges and Masterjudges for Sphere Engine service GitHub repository.

Implementation of a test case judge

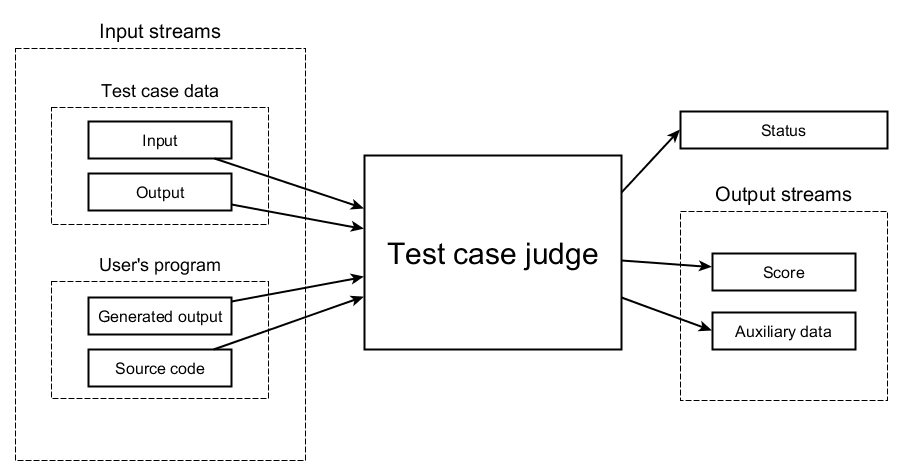

The task of a test case judge is to evaluate the submission in the context of a single test case.

The assessment of the submission consists of determining whether it is correct (by establishing the so-called verdict) and - optionally - in giving a score. The judge program makes a decision based on the test case data and the result of executing the user's program.

Dependencies are depicted in the diagram below:

The test case judge has access to several data streams. Each stream is accessible through a so-called file descriptor which is an integer.

| Name | File descriptor |

|---|---|

| Test case input data | 0 |

| Test case output data | 4 |

| User's program output data | 3 |

| User's program source code | 5 |

| Name | File descriptor |

|---|---|

| Score | 1 |

| Auxiliary/debug stream | 6 |

| User's program input data (available only for interactive problems) |

8 |

Information about the programming language identifier in which the user's program is implemented is available in the

environment variable of the namecompiler (necessarily

lowercase). The value is consistent with the list of

available programming languages.

Validation of the submission

Submission correctness in the context of a test case is determined by the so-called verdict. Verdict information is

determined by using the end signal of the program. The value of the end

signal is interpreted as follows:

- 0 – the submission correctly solves the test case (

accepted), - 1 – the submission is incorrect (

wrong answer)

For example, in C, the end signal is the value returned by the main function of the program (i.e., the function named

main).

Note: The test case judge is only able to specify two of many different verdicts (i.e., accepted or

wrong answer). Other verdicts (e.g., compilation error, runtime error, time limit exceeded) are set

automatically by the Sphere Engine system.

Score

In the case of optimization problems or problems assessed partially, an important factor in addition to solution correctness is its quality. In the case of problems of this type, the author of the judge program has the ability to determine the score. The score is presented in the form of a number (potentially a floating point number).

The score should be written to the appropriate stream (descriptor number 1). No other information should be written to

the stream with the score.

In many cases, there is no need to determine the score. Very often verification of the submission is limited to determining whether it is correct or incorrect.

Examples

The sample source codes of test case judges are available on GitHub:

Problem description

For given function

ffind its root i.e., the argumentx0thatf(x0) = 0.In general, there are many solutions to the problem, for example for polynomial

x2 + x - 2the numbers1and-2are both correct answers.You can see that it is difficult to prepare model output file in the test case. There is a possible infinite number of solutions for certain functions. Therefore, it is impossible to keep all of them in the output file. It forces us to use a different approach.

Test case judge description

The test case judge should verify the condition from the problem task i.e., for the user's answer from the output file (

descriptor number 3) it should check if that answer is a root of the given function. Test case judge uses its access to the input file (descriptor number 0) to read the function defined in the input data.

Problem description

For a given graph

Gwith n vertices1, 2, ..., ndetermine if it has a Hamiltonian cycle (i.e., closed loop through a graph that visits each node exactly once). If the Hamiltonian cycle exists print it as a sequence of vertices.It is easy to see that

1-2-3-1is the same cycle as2-3-1-2. We could add the requirement to start with the smallest vertex number. Unfortunately, it is possible that there exist many different Hamiltonian cycles which are not cyclic shifts. We could again use the previous approach (from Example 1) to verify if the user's answer is a really Hamiltonian cycle.Alternatively, we can build a model output file with all possible Hamiltonian cycles.

Test case judge description

For the user's output file (

descriptor number 3), the test case judge verifies if the sequence of vertices is present in the list that is part of the model output file.

Important: It can be problematic to keep all answers due to a possibly huge number of good solutions.

Implementation of the master judge

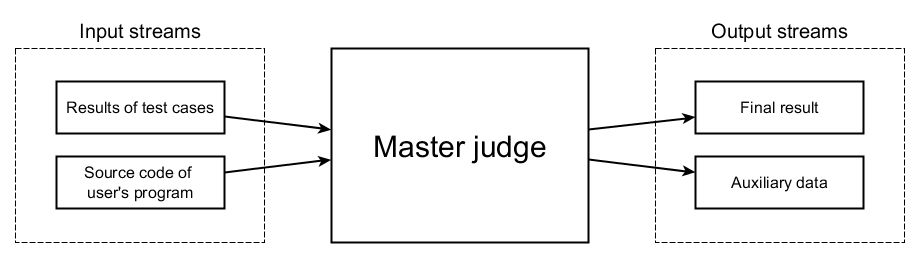

The master judge is responsible for summarizing individual test case results. Based on partial results it determines the final verdict consisting of the following parameters:

- the final verdict of the submission (e.g.,

acceptedorwrong answer), - final score,

- overall execution time,

- overall memory consumption.

The final verdict is determined based on the data from the test cases and (if requirements or restrictions are introduced) also based on the user's program source code.

Dependencies are depicted in the diagram below:

The master judge (like the test case judge) has access to several data streams. Each stream is accessible through a so-called file descriptor, which is an integer.

| Name | File descriptor |

|---|---|

| Results of test cases | 0 |

| Custom data (optional) | 3 |

| User's program source code | 5 |

| Name | File descriptor |

|---|---|

| Final results | 1 |

| Auxiliary/debug stream | 6 |

Information about the programming language identifier in which the user's

program is implemented is available in the environment

variable of the

namecompiler (necessarily lowercase). The value is consistent with the list of

available programming languages.

The format of results from individual test cases

The partial results obtained during the processing of consecutive test cases are

recorded in the appropriate stream (descriptor number 0). The data of each

test case is written in a separate line of the stream and takes the following

form:

N SCORE SIGNAL TIME MEMORY STATUSFor example:

0 AC 10 0 0.57 8096The individual parameters have the following meaning and format:

| Name | Meaning | Format |

|---|---|---|

| N | test case number | integer the numbering starts with the number 0 |

| STATUS | verdict | the following abbreviations are used:

|

| SCORE | score | a number in the X.xx format (e.g., 2.00, 1345.00, 12) |

| SIGNAL | the end signal returned by the user's program | an integer |

| TIME | CPU time | a number of seconds in the X.xx format (e.g., 2.00, 1345.00, 12) |

| MEMORY | maximum memory consumption | a number of kilobytes (integer) |

Obtaining a detailed report from processing a submission requires that the

partial results be redirected to the auxiliary stream (descriptor number 6).

The data must retain the following format:

test N - STATUS (score=SCORE, sig=SIGNAL, time=TIME, mem=MEMORY)For example:

test 0 - AC (score=10, sig=0, time=0.57, mem=8096)The final result and its format

The author of the master judge is free to determine the final result parameters. For example, the final submission execution time can be determined as the sum of the execution times of individual test cases. Alternatively, the final execution time can be determined as an average value

The final result should be written to the appropriate stream (descriptor number 1). The data format is as follows:

STATUS SCORE SIGNAL TIME MEMORYFor example:

AC 10 0 0.57 8096Individual parameters have meaning and a format similar to the parameters from the previous table. Nevertheless, all values are calculated by the master judge in the manner specified by its author:

| Name | Meaning | Format |

|---|---|---|

| STATUS | final verdict | the following abbreviations are used:

|

| SCORE | final score | a number in the X.xx format (e.g., 2.00, 1345.00, 12) |

| SIGNAL | the end signal | an integer |

| TIME | execution time | a number of seconds in the X.xx format (e.g., 2.00, 1345.00, 12) |

| MEMORY | memory consumption | a number of kilobytes (integer) |

Examples

Here we present the generalization of Score is % of correctly solved sets master judge. It is a small disadvantage that each test case is worth the same, and to increase the influence of some submission's aspect we are forced to produce many test cases.

For example, when our test cases verify three aspects

A,B, andCof the problem and we would like to apply weights of20%,30%, and50%respectively, we are able to achieve this by creating10test cases. Two of these are responsible for an aspectA; three are responsible for an aspectB; and five are responsible for an aspectC. However it is inconvenient and we can consider the following idea:Master judge description

Master judge has information about the number of test cases and weights, which it should assign to each test case. The final score is the weighted sum of accepted test cases.

For instance, for three test cases

a, b, cand weights20%, 30%, 50%the submission gets one of the possible results depending on passed test cases: - no test case passed - 0% - a - 20% - b - 30% - a, b - 50% - c - 50% - a, c - 70% - b, c - 80% - a, b, c - 100%

The content manager may require that the solution cannot use some programming structures. For example, they may want to allow usage of language C++ but with no access to the STL library, in order to force users to implement efficient data structures manually. Another example is to restrict source code to not use loop structures, to support only solutions based on recursion.

Master judge description

Master judge uses access the user's source code to detect usages of forbidden keywords (for example loop types: while, for, goto). When a forbidden keyword is detected the final verdict is set to the

wrong answer. In other cases, the master judge performs classical verification (for example the same as Generic master judge or Score is % of correctly solved sets).

We cannot directly support memory limit due to the reasons explained in memory complexity section of the Related topics. To make bounding the amount of available memory possible we can implement a master judge specifically for this purpose.

Master judge description

Master judge gathers the information of consumed memory from test case judges. We can verify the memory limit with respect to the user's solution programming language to adjust the master judge for all programming languages we allow to use.

Important: It's very important to adjust the memory limit to the programming language due to different memory needs of programs in different languages.